If you’re searching for email A/B testing best practices, it’s time to look past subject lines. Opens matter, but most of your revenue (and also most of your unsubscribe risk) is created after the open – inside the email, and at the moment it lands.

In this article, we’ll concentrate mainly on content and timing, showing how mastering these elements in your emails can turn small experiments into big wins.

Why Testing Beyond Subject Lines Pays Off

Don’t get us wrong: subject lines absolutely deserve testing. They’re the fastest lever to pull, they’re easy to isolate, and they make the difference in results easy for teams to see.

That said, subject line testing works best as your baseline discipline, not your only one. More lasting wins often come from testing what happens after the open: how your message is structured, what you ask people to do, and when the email arrives.

A practical way to think about it:

- Subject line tests help you earn the open.

- Content tests help you earn the click (and the conversion).

- Timing tests help you earn relevance, because the moment an email arrives strongly influences how people act on it.

So, here are your key takeaways to start testing:

╰┈➤ If you want your A/B program to drive durable improvements, expand your test plan from “what gets opened” to “what gets results.”

╰┈➤ While a winning subject line can usually be used only once, a winning content structure can be reused across campaigns and automations.

╰┈➤ The email is a decision environment. Subject lines get attention. Content creates intent. (Good) timing gives room for decision.

Think of the People! (A Crucial Note on Your Audience’s Character)

When designing emails (and especially when running A/B analysis), you must account for how homogeneous or diverse your audience is.

- A homogeneous audience (similar roles, expectations, and vocabulary) often produces clearer test results and faster convergence.

Examples: a B2B SaaS newsletter aimed solely at payroll managers; a professional association mailing list; a niche hobby brand with one flagship product category.

- A diverse audience (a wide range of experience, goals, and preferences) can dilute results because different subgroups respond in opposite ways.

Examples: a national retail chain with many categories; a university alumni list; a media publisher covering a variety of topics.

And yes – every company has its own unique crowd, and so do you! That’s why “industry benchmarks” are a starting point, not a verdict (and we find this quite comforting).

How to Segment and Choose the Right Tests

When you run an A/B test on your entire list, you get one “average” result: useful, but often misleading. Different subscriber groups can react in opposite ways, so the average may hide the real winner (or make a good change look neutral).
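To make this concrete, here is a minimal sketch with made-up numbers. The segment names and counts are hypothetical, not results from a real campaign. It shows two segments that react in opposite ways, so the whole-list result looks like a tie.

```python
# Hypothetical counts: (recipients, conversions) per variant within each segment.
results = {
    "new_subscribers": {"A": (5000, 150), "B": (5000, 200)},
    "loyal_customers": {"A": (5000, 250), "B": (5000, 200)},
}


def rate(recipients, conversions):
    """Conversion rate as a fraction of recipients."""
    return conversions / recipients


def blended_rate(results, variant):
    """Whole-list conversion rate for one variant, ignoring segments."""
    recipients = sum(seg[variant][0] for seg in results.values())
    conversions = sum(seg[variant][1] for seg in results.values())
    return conversions / recipients


# Per-segment view: each segment has a different winner.
for name, seg in results.items():
    print(name, {v: f"{rate(*counts):.1%}" for v, counts in seg.items()})

# Whole-list view: the two effects cancel out.
print("whole list", {v: f"{blended_rate(results, v):.1%}" for v in ("A", "B")})
```

In this invented data set, B wins among new subscribers (4.0% vs 3.0%), A wins among loyal customers (5.0% vs 4.0%), and the whole list shows 4.0% for both variants, which would look like "no difference" if you only read the average.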

Segmentation helps you learn what works for whom, so you can scale wins confidently. Let’s see how.

Step 1: Choose one primary success metric

When you’re wondering what email marketing metrics to analyze, choose the one that matches the email’s job (and stick to one primary metric per test):

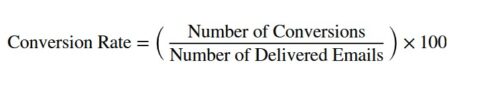

- Sales / promo email → conversion rate (primary) + overall ROI (business check)

- Content newsletter → click-through rate (CTR) or click rate (primary engagement signal)

- Activation / onboarding → click-through rate (CTR) → conversion rate (did they complete the action?)

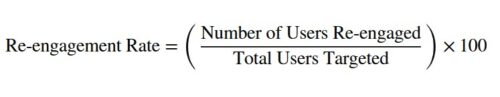

- Re-engagement → open rate (are they back?) + watch unsubscribe rate and spam complaint rate as guardrails

- Deliverability-focused tests (list hygiene, sending patterns) → bounce rate as an early warning signal
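If you want to compute these metrics yourself, the sketch below shows one common way to define them. Treat the denominators as assumptions: email platforms differ on whether rates are based on sent or delivered emails, so check how your own tool reports them. The `CampaignCounts` class and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CampaignCounts:
    """Raw counts for one variant of one send (hypothetical field names)."""
    sent: int
    bounced: int
    unique_opens: int
    unique_clicks: int
    conversions: int
    unsubscribes: int

    @property
    def delivered(self) -> int:
        return self.sent - self.bounced


def safe_div(numerator: float, denominator: float) -> float:
    """Return 0.0 instead of raising when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def metrics(c: CampaignCounts) -> dict:
    """Assumed definitions: most rates are per delivered email."""
    return {
        "bounce_rate": safe_div(c.bounced, c.sent),
        "open_rate": safe_div(c.unique_opens, c.delivered),
        "click_through_rate": safe_div(c.unique_clicks, c.delivered),
        "click_to_open_rate": safe_div(c.unique_clicks, c.unique_opens),
        "conversion_rate": safe_div(c.conversions, c.delivered),
        "unsubscribe_rate": safe_div(c.unsubscribes, c.delivered),
    }


example = CampaignCounts(sent=10000, bounced=200, unique_opens=2450,
                         unique_clicks=490, conversions=98, unsubscribes=10)
for name, value in metrics(example).items():
    print(f"{name}: {value:.2%}")
```

Whatever definitions you settle on, use the same ones for both variants and across tests, otherwise results are not comparable.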

Step 2: Build segments that map to intent (what people want and do), not just demographics

Basic demographics (age, location, job title) can be useful, but they don’t always explain why someone clicks or buys. Stronger segments usually come from intent signals: where the subscriber is in their relationship with you, what they’ve done recently, and what they’ve shown interest in.

High-performing segmentation often uses:

- Lifecycle: new subscriber, active customer, lapsed customer

- Behavior: what they browsed or bought, how recently they purchased, how often they open/click your emails

- Preference signals: selected topics, preferred sending frequency, language choice

- Customer value: average order value (AOV), high-value customers, discount-seekers vs full-price buyers
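As an illustration, the lifecycle signal above could be turned into a simple rule. This is only a sketch: the 30-day and 180-day cut-offs, the function name, and the extra "subscriber_no_purchase" label are assumptions you should replace with values that fit your own buying cycle.

```python
from datetime import date
from typing import Optional

# Hypothetical cut-offs; tune them to your own purchase cycle.
NEW_SUBSCRIBER_DAYS = 30
LAPSED_AFTER_DAYS = 180


def lifecycle_segment(signup: date, last_purchase: Optional[date], today: date) -> str:
    """Classify a subscriber by lifecycle stage using two dates."""
    if last_purchase is not None:
        days_since_purchase = (today - last_purchase).days
        if days_since_purchase <= LAPSED_AFTER_DAYS:
            return "active_customer"
        return "lapsed_customer"
    # No purchase yet: separate fresh sign-ups from older non-buyers.
    if (today - signup).days <= NEW_SUBSCRIBER_DAYS:
        return "new_subscriber"
    return "subscriber_no_purchase"


today = date(2024, 6, 30)
print(lifecycle_segment(date(2024, 6, 10), None, today))               # recent sign-up
print(lifecycle_segment(date(2023, 1, 5), date(2024, 5, 1), today))    # bought 60 days ago
print(lifecycle_segment(date(2022, 3, 1), date(2023, 6, 1), today))    # bought over a year ago
```

Behavior, preference, and value signals can be layered on top in the same way, one explicit rule per segment, so that everyone on the team can see who ends up where.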

Step 3: Decide what you’re testing

For advanced A/B testing, keep the test scope narrow:

- One hypothesis

- One primary metric

- One audience (or explicitly separate segments)

If you test five things at once, you won’t learn why something worked.

Step 4: Make your test statistically and operationally “fair”

- Randomize assignment within the segment.

- Run the test long enough to cover day-of-week effects (often 3–7 days for newsletters; longer for low-volume lists).

- Avoid overlapping tests, that is, running multiple tests on the same subscribers at the same time (contamination is real). If someone is in two experiments, you won’t know which change caused the result, and the tests can skew each other.

- Watch sample size: tiny lifts require big lists; for small lists, aim for bigger, more meaningful changes.
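Two of the points above, random assignment and sample size, can be sketched in a few lines. This is a rough illustration under stated assumptions, not a replacement for your platform's built-in tools: `assign_variant` uses a hash as a deterministic stand-in for random assignment, and `sample_size_per_variant` uses the standard normal-approximation formula for comparing two proportions with a two-sided 5% significance level and 80% power. Both function names and the example rates are hypothetical.

```python
import hashlib
from math import ceil
from statistics import NormalDist


def assign_variant(subscriber_id: str, test_name: str) -> str:
    """Deterministic 50/50 split: the same subscriber always gets the same variant.

    Including the test name in the hash gives each test its own independent split.
    """
    digest = hashlib.sha256(f"{test_name}:{subscriber_id}".encode("utf-8")).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate recipients needed per variant to detect the given difference."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


print(assign_variant("subscriber-123", "cta-clarity-test"))
# A small lift (3.0% -> 3.3%) versus a large one (3.0% -> 4.5%).
print(sample_size_per_variant(0.03, 0.033))
print(sample_size_per_variant(0.03, 0.045))
```

With these example rates, the small lift needs on the order of 50,000 recipients per variant, while the large lift needs only a few thousand. That is the arithmetic behind "tiny lifts require big lists".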

Step 5: Build a “mix” of tests, not just one big bet

It’s tempting to hunt for one perfect A/B test that changes everything. In practice, the strongest results come from running a balanced set of smaller tests, because different levers improve different outcomes, and you learn faster.

A balanced portfolio looks like this:

- Basics (foundation tests): test the email’s structure, CTA, and layout. These often bring the biggest, easiest wins—and you can reuse the learnings in many campaigns.

- Offer tests: test what you’re selling and how you frame it (bundles, minimum spend thresholds, urgency messaging). These are most likely to move revenue.

- Timing tests: test when emails go out (send time, delays in automations, how often you send). This improves relevance and can also support deliverability.

- Personalization tests: test dynamic blocks and recommendations. These lift results for specific segments.

Need to dig deeper into segmentation?

🌕 Here’s our big summary of segmentation in email marketing to get you started: Email Segmentation: The Complete Overview

🌒 To go into more detail about grouping your subscriber base, read our recent article on microsegmentation: Micromarketing Secrets: How Analyzing Small Email Segments Can Deliver Big Results

Real-World Tests for Content and Timing

Below are practical, “you can run this next week” examples with A and B variants, written as mini playbooks.

Example 1: CTA clarity vs cleverness

Hypothesis: A specific call-to-action (“See sizes & delivery dates”) will beat a generic CTA (“Shop now”).

| 🅰️: “Shop now” button under product grid |

| 🅱️: “See sizes & delivery dates” button + one sentence that reduces friction (“Delivery in 2–3 days. Free returns.”) |

Primary metric: revenue per recipient (or conversion rate)

Best for: e-commerce, booking, SaaS trial nudges

Example 2: Above-the-fold hierarchy

Hypothesis: Putting the value proposition before the hero image improves mobile clicks.

| 🅰️: Hero image → headline → copy → CTA |

| 🅱️: Headline + 2-line benefit → CTA → hero image (smaller) |

Primary metric: click-to-open rate

Pro tip: segment by mobile vs desktop; hierarchy changes often behave differently across devices.

Example 3: Short vs long copy (but only for the right segment)

Hypothesis: Long-form storytelling in emails increases conversion for high-intent customers, but hurts cold leads.

Segments:

- Segment #1: clicked in the last 30 days (warm)

- Segment #2: no clicks in 90 days (cold)

| 🅰️ (short): short copy + offer → CTA |

| 🅱️ (long): story-led email or problem & solution + proof + offer → CTA. |

| ⚠️ Still one primary CTA for both – just more context before it |

Primary metric: conversion rate (for segment #1: warm) / re-engagement rate (for segment #2: cold)

Likely outcome: different winners per segment—and that’s the point.

How to read the results?

If variant 🅱️ (long) wins in Segment #1 (warm): your engaged audience wants context and proof before acting.

If variant 🅰️ (short) wins in Segment #2 (cold): low-effort, quick messaging gets them clicking again.

If results don’t match the hypothesis (e.g., long wins for cold or short wins for warm): treat it as a signal about your audience. Keep the winner for that segment and run a follow-up test (e.g., “medium-length” copy or a different proof element) to learn why.

Example 4: Offer framing – percentage vs threshold

Hypothesis: For shoppers whose carts are close to a free-shipping threshold, “Free shipping over €X” will drive more purchases than “-10% today”.

╰┈➤ Predefine segments and a measurement plan upfront, then judge results accordingly. Before sending, decide what “near-threshold” means (e.g., within 10–20% of €X) and tag/segment those recipients.

Segments (set these up before you pick a winner):

- Segment #1: near-threshold carts (e.g., cart value is within ~10–20% below €X)

- Segment #2: far-from-threshold carts (meaningfully below €X)
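Tagging these segments can be as simple as the sketch below. It assumes that "near-threshold" means the cart is below the threshold by no more than 20% of the threshold value. That band, the function names, and the extra "at_or_above_threshold" label are assumptions for illustration.

```python
def cart_segment(cart_value: float, threshold: float, near_band: float = 0.20) -> str:
    """Tag a cart relative to the free-shipping threshold.

    near_band is the assumed share of the threshold that still counts as "near".
    """
    if cart_value >= threshold:
        return "at_or_above_threshold"
    gap_share = (threshold - cart_value) / threshold
    return "near_threshold" if gap_share <= near_band else "far_from_threshold"


def amount_to_free_shipping(cart_value: float, threshold: float) -> float:
    """How much more the shopper needs to spend (0 if already eligible)."""
    return max(threshold - cart_value, 0.0)


threshold = 50.0  # hypothetical value for X
for cart in (45.0, 38.0, 20.0, 60.0):
    print(cart, cart_segment(cart, threshold), amount_to_free_shipping(cart, threshold))
```

Carts already at or above the threshold are tagged separately here so they can be left out of the test, since the free-shipping message has nothing left to motivate.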

| 🅰️ (discount framing): Offer block headline + CTA: “Get -10% today” + short supporting line. |

| 🅱️ (threshold framing): Offer block headline + CTA: “Free shipping over €X” + cart-based reminder copy (e.g., “You’re €12 away from free shipping.”) |

| ⚠️ Same subject line, same products/cart items shown, same layout, same send time. Only the offer block changes. |

Primary metric: conversion rate (secondary check: email marketing ROI)

How to read the results?

If variant 🅱️ (threshold framing) wins in Segment #1 (near-threshold): keep free-shipping framing there – it reduces friction and gives a clear goal.

If variant 🅰️ (discount framing) wins in Segment #2 (far-from-threshold): a discount often feels more achievable than “spend more to save.”

If the overall winner is unclear, don’t default to the average – choose winners per segment (it’s common for A to win in one and B in the other).

If results contradict the hypothesis (e.g., discount wins near-threshold), treat it as an audience signal and run a follow-up test (e.g., different €X message wording, smaller threshold, or “€ off” instead of “% off”).
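To judge winners per segment rather than on the average, one option is a two-proportion z-test run separately for each segment. The sketch below uses invented counts and a conventional 5% significance level. Both are assumptions, and your email platform may already report significance for you.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference B minus A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no conversions (or all converted): nothing to compare
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def pick_winner(conv_a: int, n_a: int, conv_b: int, n_b: int, alpha: float = 0.05) -> str:
    z, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    if p_value >= alpha:
        return "no clear winner"
    return "B" if z > 0 else "A"


# Hypothetical results: (conversions A, recipients A, conversions B, recipients B).
segments = {
    "near_threshold": (120, 4000, 172, 4000),
    "far_from_threshold": (150, 4000, 140, 4000),
}
for name, counts in segments.items():
    print(name, pick_winner(*counts))
```

In this invented example, B is a clear winner for near-threshold carts, while the far-from-threshold segment shows no clear winner and would need more data or a bolder variant.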

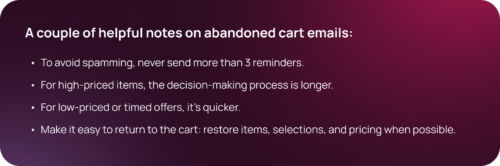

Example 5: Timing test for abandoned cart series

Abandoned cart emails work because they arrive while intent is still warm, and timing is often the difference between “nice reminder” and “too late.” Let’s put it to the test: which delay suits your customers best?

Hypothesis (pick one, then test):

- H1: A faster first reminder (10 minutes) lifts conversion rate because intent is at its peak.

- H2: A slightly later first reminder (1 hour) lifts conversion rate because it feels less pushy and catches people when they’re back at their device.

| 🅰️: Email #1 at 10 minutes |

| 🅱️: Email #1 at 1 hour |

| ⚠️ Keep the rest identical in both variants – for example: Reminder #2 after 24h and Reminder #3 after 72h, with the same content and offers. That way, you’re really testing timing. |

Primary metric: conversion rate (secondary check: email marketing ROI)
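Written down as configuration, the two variants differ in exactly one value. The sketch below assumes all delays are measured from the moment the cart was abandoned. Your automation tool may measure them from the previous email instead, so treat the structure and names as hypothetical.

```python
from datetime import datetime, timedelta

# Only the first delay differs between variants; reminders #2 and #3 are identical.
SERIES = {
    "A": [timedelta(minutes=10), timedelta(hours=24), timedelta(hours=72)],
    "B": [timedelta(hours=1), timedelta(hours=24), timedelta(hours=72)],
}


def send_times(abandoned_at: datetime, variant: str) -> list:
    """Planned send time of each reminder for one abandoned cart."""
    return [abandoned_at + delay for delay in SERIES[variant]]


abandoned_at = datetime(2024, 1, 1, 12, 0)
for variant in ("A", "B"):
    print(variant, [t.isoformat() for t in send_times(abandoned_at, variant)])
```

Keeping the schedule in one place like this makes it easy to confirm, before launch, that nothing except the first delay has drifted between the variants.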

Example 6: Send-time windows for newsletters

Hypothesis: Morning wins for busy professionals; evening wins for hobby readers.

Segments:

- Segment #1: profession-driven

- Segment #2: interest-driven

╰┈➤ Create segments based on your specific audience; consider how you could categorize them into two larger groups.

| 🅰️: Tuesday, 09:00 |

| 🅱️: Tuesday, 20:30 |

Primary metric: click-to-open rate

What advanced teams do: keep content identical for 2–4 sends, then rotate days to rule out day-specific bias.

Example 7: Personalization depth

Customers now expect offers tailored to their interests. However, excessive personalization can lead to discomfort or a sense of invasion of privacy. A/B testing can help you find the right balance for your distinct group of subscribers.

Hypothesis: Light personalization (one relevant block) increases click-through rate (CTR) and conversion rate, while heavy personalization can backfire, reducing trust and increasing unsubscribe rate for some audiences.

| A (no personalization): Generic email with “Top picks this week” (same products for everyone). |

| B (light personalization): One dynamic block: “Picked for you” based on [category viewed] or [last purchase category] + clear wording like “Recommended based on your browsing.” |

| C (heavy personalization): Multiple personalized elements. |

| ⚠️ What stays the same: same subject line, same offer, same layout, same send time. Only personalization depth changes. |

Primary metric: click-through rate (CTR) (secondary check: conversion rate; guardrail: unsubscribe rate)

How to read the results?

If variant B wins: you’ve found the sweet spot – keep one relevant dynamic block.

If variant C wins: your audience is comfortable with deeper personalization; scale it carefully and keep transparency.

If A beats B/C: personalization isn’t trusted or isn’t relevant enough – improve data quality, reduce specificity, or personalize by broader interest groups instead.

If you want to keep it strictly A/B (no C), you can compare B (light) vs C (heavy) once you’ve already proven personalization helps at all.

Example 8: Wording approach – benefits-first vs features-first

Finally, let’s look at how to test writing style. Some audiences decide fast when you lead with the outcome (“what’s in it for me”). Others need specifics first (“what exactly do I get?”). Learn which style fits your subscribers.

Hypothesis: A benefits-first message increases click-through rate (CTR) for broad or mixed audiences, while a features-first message performs better for expert or comparison-minded audiences.

| 🅰️ (benefits-first): Open with 1–2 outcome statements → quick proof/credibility → feature details → CTA (Example lead: “Save 2 hours a week on reporting, without changing your workflow.”) |

| 🅱️ (features-first): Open with 3–5 concrete features/specs → short “why it matters” benefit line → CTA (Example lead: “Includes X, Y, and Z. Works with A. Exports to B.”) |

Primary metric: click-through rate (CTR) (secondary check: conversion rate)

How to read the results?

If 🅰️ (benefits-first) wins: your audience tends to respond better to outcome-led messaging – lead with benefits more often, and keep details lower in the email.

If 🅱️ (features-first) wins: your audience tends to prefer specifics up front – start with features (especially for higher-consideration decisions), then connect them to a clear benefit.

If the result is mixed or only slightly in favour of one variant, that’s often a sign your list contains different reader types. You can keep the overall winner for now, but if you want deeper learnings, repeat the test inside a few key segments (e.g., engaged vs less engaged, new vs long-term subscribers, customers vs leads) and see whether the preferred wording style changes.

In Conclusion: Your Next Send Just Got a Lot More Interesting!

Congratulations, you’ve reached the end of this article! At first, advanced A/B testing techniques may seem like more than you want to add to your bag of tricks, but what you learn from them will have a long-lasting impact on the effectiveness of your email marketing.

If you treat subject lines as the “front door,” content and timing are everything inside the store: layout, signage, helpful staff, and the moment the customer walks in. That’s where advanced A/B testing becomes a real growth engine – especially once you respect segmentation and the realities of a mixed audience.

Once you start testing with this level of intention, email A/B testing stops being an experiment and starts being a competitive advantage.